A Discrete Approach to the Sum Over One Problem

January 9, 2018

Black Magic

Here is an astonishing true thing:

The average number of samples that must be drawn from a uniform distribution on before their sum exceeds one is equal to Euler’sEuler’s number, also know as Napier’s constant, was actually discovered by the Swiss mathematician Jacob Bernoulli during his studies of compound interest. It’s value is approximately 2.718281828. It is the basis of natural logarithms and curiously ubiquitous in mathematics. number, .

I first learned of this fact via a Tweet from @fermatslibrary, and it was a genuine shock. How in the world does Euler’s number emerge, for no apparent reason, in response to this innocuous question of probability? Can this even be true? And if it is, as I. I. Rabi famously saidRabi had other good, quotes, too: “My mother made me a scientist without ever intending to. Every other Jewish mother in Brooklyn would ask her child after school: So? Did you learn anything today? But not my mother. “Izzy,” she would say, “did you ask a good question today?” That difference — asking good questions — made me become a scientist.” of the discovery of the muon, “Who ordered that?!”.

And yet it is true, provably true, and somewhat well-known. Attached to that Tweet is an elegant (if terse) proof. Neal Grantham, who terms this the sum over one problem, takes a rather more direct approach, but arrives at the same delightful conclusion.

These proofs do not fail to convince, but I find them somehow viscerally unsatisfying. They offer little insight into why suddenly appears, in terms of anything else we know about . Of course assumes many guises, but it is perhaps most famously understood as the following limitFor an excellent overview of e, I recommend e: The Story of a Number, by Eli Maor. The number has a rich and fascinating history, which touches the lives of many great mathematicians. :

The proofs above fail to inform me who ordered that.

So how does the of Eq. make contact with our question of probability? I wondered if exploring a discrete version of the problem might demystify ’s bolt-from-the-blue arrival. This article chronicles these explorations and provides a more organicAccording to me. explanation for understanding of why is the obvious—or at least plausible—answer.

Alea Iacta Est

Here is the discrete version of the game. We roll an -sided die

until the cumulative sum of the throws exceeds .

Dice. These dice have six sides each, but

we’ll be considering here the more general problem of n-sided dice. We

ask, on average, how many times must we throw that die? This problem is at once

easier to conceptualize than its continuous counterpart, because it is

discrete, and much more difficult to actually calculate—because it is discrete.

Let be the random variable corresponding to the number of times we must throw the die until the sum of the throws first exceeds , the number of sides on the die. Ultimately we want to find the probability distribution — the probability that the sum of our throws first exceeds after exactly throws — because once we know this, computing the expected value of is straightforward via the usual definition:

Note the limits of summation: we’re running from to . There is zero chance of exceeding on the first roll; the best we can do there is itself. On the other hand, the sum is guaranteed to exceed after rolls, because each roll generates, at minimum, a value of oneNote that although the probability of this sum exceeding n on roll n+1 is unity, that’s not what we’re calculating. We’re calculating the probability that the sum first exceeds n on that roll, and not before, which will be less than one. .

Let represent the random variable corresponding to the roll. The key to calculating is to realize that it must equal the difference between the following probabilities:

But note that,

Using Eq. and Eq. , we can write as,

And now we have only to calculate the probability that the sum of throws is less than or equal to .

Counting Is Hard

We assume that the throws are independent events, so the possible outcomes are equally likely. But to compute the probability, we must know, of these outcomes, how many satisfy ? If we call this number , then the probability is,

So the problem, then, is to compute for arbitrary and .

To find , let’s first answer a slightly easier question: how many outcomes will sum to exactly using integers?

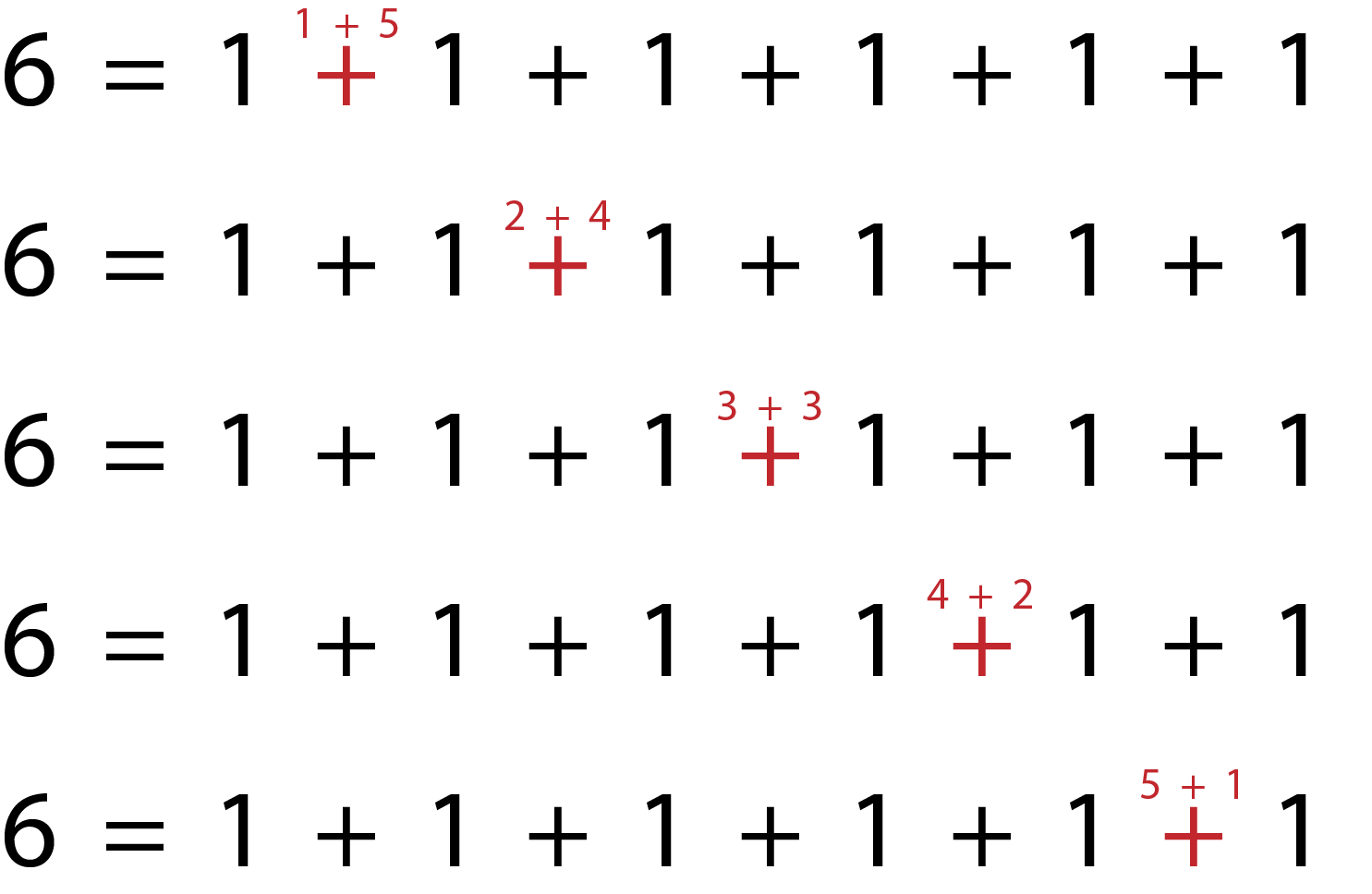

One way to attack this problem is to write out as the sum of

ones (which sum is guaranteed, of course, to sum to ),

and then consider how many ways we can partition those ones into different

groups. This is illustrated in the figure to the right, for the case of

and

The number of ways we can sum to six via two

positive integers is the number of ways we can select one plus sign from among

the five plus signs in the equation 6 = 1 + 1 + 1 + 1 + 1 + 1, if we regard the

selected plus sign as partitioning the sum into two pieces. The sum to the left

of the selected plus sign represents one number, and the sum to the right of

that plus sign represents the other. If we wanted to sum to exactly six using

three numbers, we’d find the number of ways to select two plus signs from the

five available; and so on.. It is easy to see that the partitioning is

equivalent to asking, “How many ways can I select one plus sign from the five

plus signs?” On the other hand, if we were to ask, how many ways are there to

sum to six using three positive integers, we’d want to calculate how

many ways we can select two plus signs from those five plus signs, and so

forth. This question is answered, in general, using combinations.

Recall that a combination is a selection of items from a collection such that the order of the selection does not matter. The number of ways in which one may select items from among available items is given by,

By this argument we see that, in general, the number of ways we can sum to exactly using positive integers, which we’ll denote as , is given by,

Using a six-sided die, for example, the number of ways we can sum to six with two throws is given by,

Note that the five corresponds to the number of possibilities enumerated in the example to the right. This all seems to hang together.

But Eq. requires the total number of outcomes whose sum is less than or equal to . That number will equal the sum the the number of ways we can reach for all :

where in the last step we’ve used the so-called hockey-stick identity of combinations.

This is a delightfully simple resultDelightful, but hardly surprising. Most interesting counting problems wend their way back to Pascal’s triangle eventually, it seems. that allows us to compute the probability of interest:

And this, in turn, allows us to calculate the probability in Eq. , . Using Eq. and ,

And now finally, we’re in a position to compute via Eq. .

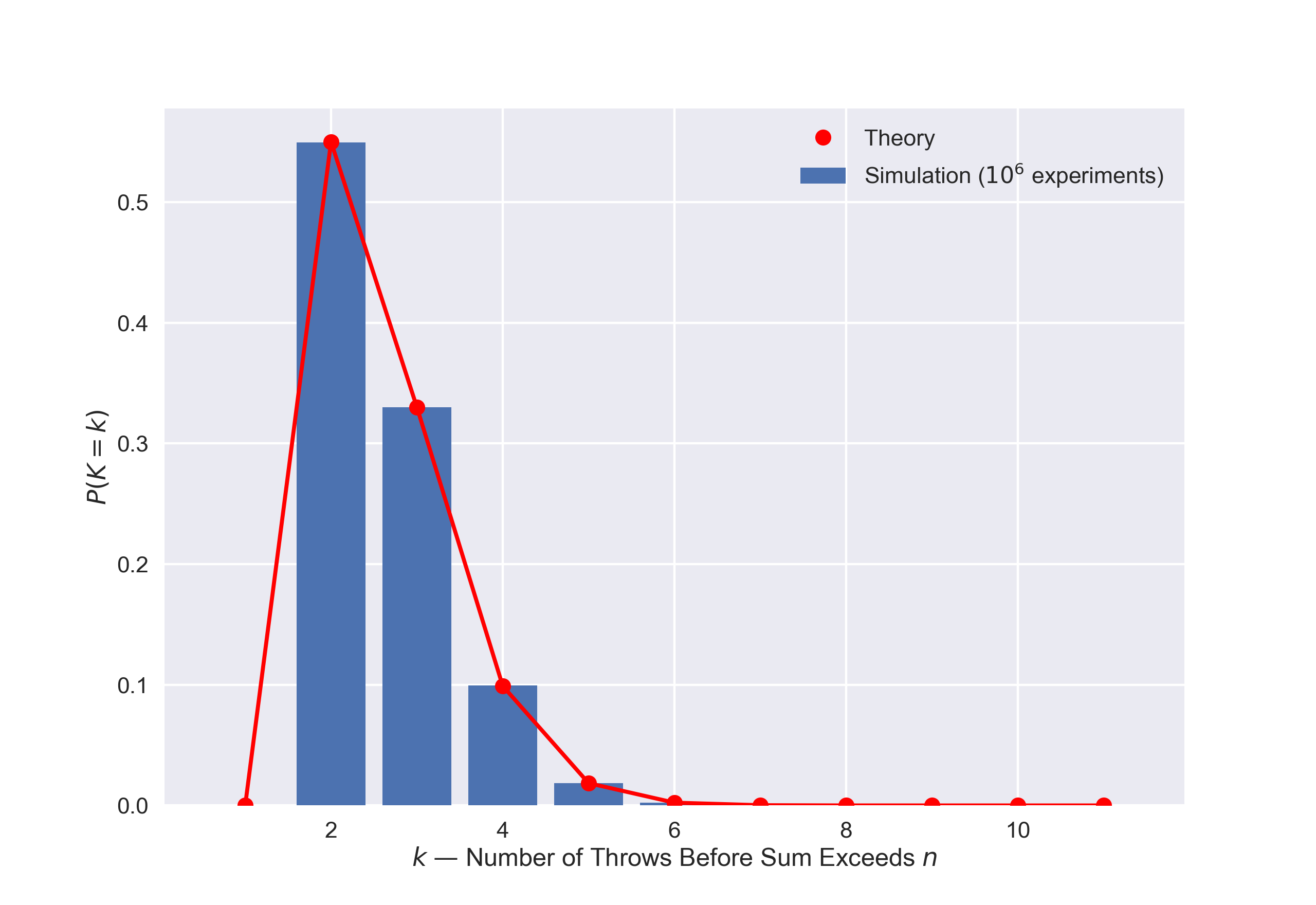

But, well, after all these perambulations, we might wonder if we’ve made a mistake. In these cases, it is prudent to turn to numerical simulation to verify our analytic results. Let’s check our sanity by verifying Eq. by way of simulation. We do this by simulating our die throwing experiment in Python using a synthetic die with sides, and compare the empirically measured probabilities with our theoretically calculated results, via Eq. . The results of this, for simulated rounds, is presented in the figure below:

Now that we’ve convinced ourselves that we are correctly computing the probability , we are finally in a position to calculate the expectation we’re after.

Great Expectations

Before computing , let’s rearrange Eq. to make it a bit simpler. Note that with a little algebra we can write,

This allows us to write Eq. , with yet a bit more algebra, as,

Multiplying by and summing, as per Eq. , we obtain,

And there we are. For a die with sides we can now compute the expected number of throws we’ll need to exceed . What do we expect for a six-sided die? For , Eq. yields,

a number that is decidedly not Not that we’d expect it to be, of course; rational numbers tend never to be irrational. . But, interestingly, neither is it too far off. Let’s consider a die with more sides, say, :

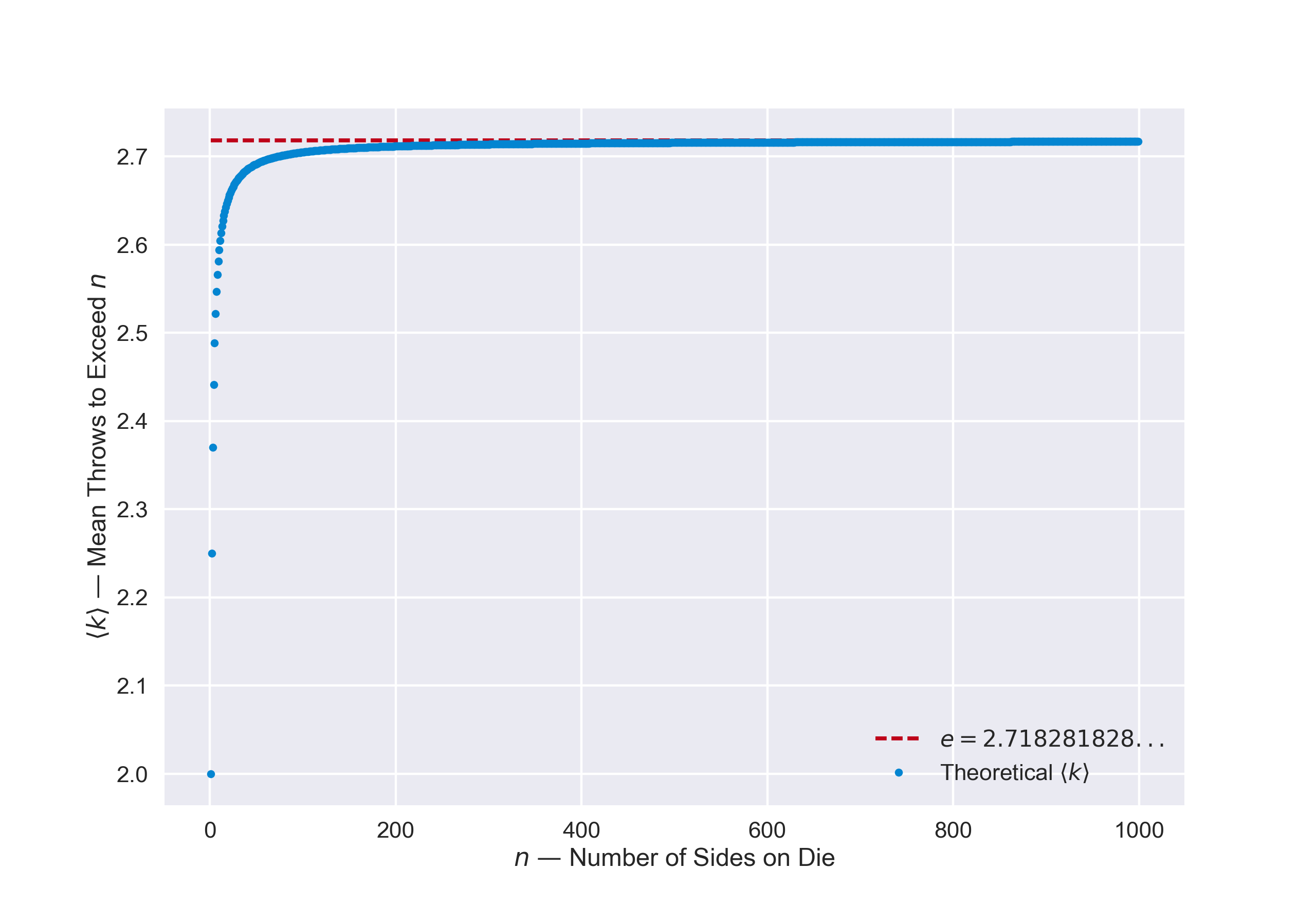

Even closer! Intrigued by these observations, let’s keep increasing the number of sides on our die. If we continue calculating the expectation for ever higher values of , we generate the following plot:

It appears that, as we let increase, the expected number of throws required for the sum to exceed converges to a number — and that number, empirically at least, appears to be . But can we prove it? Sure we can.

Let’s write out Eq. in all its glory,

Now consider the case where . The above expression simplifies,

And now note that we can change the lower limit of the summation; rather than starting at , we can start at , provided we replace all the terms that appear in our summand with . Doing this we obtain,

where, again, this holds for . This may begin to look familiar. In particular, recall the binomial theorem, which states that,

In our present situation, letting , we can write our expectation as,

And we’ve recovered the definition of Euler’s number, à la Eq. !

Here is where I find emerge organically, in a sense. For all finite values of , the expectation is a rational number. But as the number of sides increases, the combinatorics of how the throws can sum to exceed evolves in just the right way such that—as gets really large—it starts to converge to the binomial expansion of , which in the limit turns out to be exactly the definition of . And for my part, at least, I can start to see a glimmer of an answer to that primal question: who ordered that?Of course, you might argue, the other proofs I’ve cited could be brought to this same point—the binomial expansion could ultimately be reconstructed from those calculations as well. Certainly, I’d agree, they must all be equivalent at some level, but seeing the binomial theorem emerge directly from the combinatorics of die throws and lead to the very definition of Euler’s constant makes me feel happy inside.

From Discrete Back to Continuous

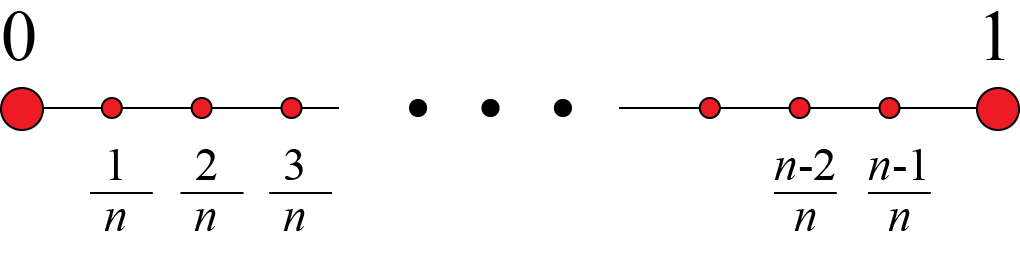

As heads off to infinity, our discrete problem becomes equivalent to the original, continuous problem introduced at the start. To better see the equivalence, note we can map the discrete problem onto the unit interval, as in the following illustration.

Rather than considering an -sided die, we partition the unit interval into subintervals. At each step, we then sample a number uniformly from the interval , and select as our value the uppermost limit of whatever subinterval the sample falls into. We continue to select values in this manner until their sum exceeds one, just as in the original formulation of the problem—but now we’re working in the context of a discrete problem. And now, as , discrete meets continuous, and by the arguments advanced above, we’ve proven the original assertion.